Analyzing the Models

By Dr. Serhan Guner, Contributing Author

When civil engineers design a bridge, they often rely on structural analysis models of varying degrees of complexity.

These analyses are intended to ensure the safety of the bridge, first and foremost, but they also have implications in terms of cost and the service life of bridges.

A variety of analysis methods and software can be used to create and analyze these models. This process can be quite complex with significant amounts of input and output data.

Quality assurance (QA) and quality control (QC) are two essential processes for the quality management of bridge analysis models.

A good QA/QC program is a deliberate and systematic approach to reducing the risk of introducing errors and omissions into an analysis. Errors become more likely when office policies and standardized procedures are not established and followed.

It is also important to have experienced, competent staff and good relationships across disciplines. The designer must understand the limitations of the analysis method and software, possess experience in developing analysis models with appropriate approaches and assumptions, and correctly interpret the results. An appropriate understanding of the expected behavior of the structure is also required to assess whether the predicted behavior represents the actual performance of the structure.

Quality processes often suffer when staff are less experienced and schedules leave less time for in-depth quality checks.

To document state Department of Transportation (DOT) practices related to the quality processes for bridge structural models, the National Cooperative Highway Research Program (NCHRP) established a project panel under NCHRP Project 20-05, Topic 54-11, and published Synthesis 620: Quality Processes for Bridge Analysis Models.

I was selected by the topic panel to conduct this synthesis. I gathered information through a literature review, an online survey of state DOT agencies, and follow-up interviews with selected agencies for developing case examples.

The survey was completed by 51 DOTs, including 50 states and the District of Columbia, yielding an overall response rate of 100%. Detailed case examples include the state DOTs of California, Colorado, Iowa, Louisiana and New York.

The result is more than 100 pages of synthesis that explores the quality processes for bridge analysis models among state DOTs that range in size from fewer than 10 in-house bridge engineers to more than 80.

The findings of the study offer a comprehensive look at how each DOT operates when it comes to quality processes for bridge analysis models. Each department has its own processes, and there is value in understanding and learning from them.

Analyzing Survey Responses

The survey responses indicate that, on average, 59% of new bridge and bridge replacement designs are assigned to consultants, while 41% are designed in-house by DOTs.

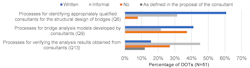

The survey asked respondents three questions on the presence of processes for certain consultant-related bridge design activities. The three response options were “written,” “informal” and “no process.” An additional option, “as defined in the proposal of the consultant,” was included in one of the questions.

The most common written process, selected by 31 DOTs (61%), is for “identifying appropriately qualified consultants.”

The least common written process, selected only by 8 DOTs (16%), is for “verifying the analysis results obtained from consultants.” Six DOTs (12%) indicated that this process may be undertaken as defined in the proposal of the consultant.

The most common “no process” response, as selected by 19 DOTs (37%), is for the “bridge analysis models developed by consultants.”

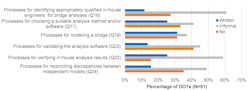

The survey asked respondents six questions on the presence of processes for certain in-house bridge design activities.

The responses indicate that the number of DOTs with written in-house processes is significantly lower than the number with written consultant processes.

The largest number of DOTs with a written in-house process (for modeling a bridge) is 16 (31%) while the largest number of DOTs with a written consultant process (for identifying appropriately qualified consultants) is 31 (61%).

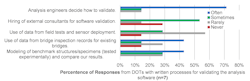

The respondents were asked if their agencies have any written or informal processes for validating the analysis software. The question defined validation as “the process of confirming that structural analysis software provides results that adequately represent the real physical behavior of the structure being modeled. Methods for validating structural analysis software include comparing predictions of the software to experimental results or benchmarks available in the literature.”

The responses indicate that only seven DOTs (14%) have written processes while 21 DOTs (41%) have no processes for validating the analysis software.

The most common response for the “often” frequency, as selected by five DOTs (71%), is “analysis engineer decides how to validate.” The most common response for the “sometimes” frequency, as selected by four DOTs (57%), is “hiring external consultants.” The least commonly used validation method is the “use of data from field tests and sensor deployment.”

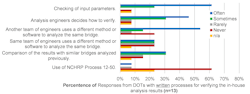

The survey asked the respondents if their agencies have written or informal processes for verifying their in-house analysis results. The question defined verification as “the process of confirming that the analysis is performed correctly and with the correct input. Methods for validating structural analysis results might include comparing the results with the results obtained from another software, tool, spreadsheet, or hand calculations.”
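The kind of check this definition describes, comparing a software result against a hand calculation, can be illustrated with a minimal sketch. Everything specific in it is an assumption made for illustration and does not come from the synthesis: the simply supported span, the uniform load, the software value and the 5% acceptance tolerance are all hypothetical, and an agency's written process would set its own criteria.

```python
import math


def midspan_moment_hand_calc(w_kn_per_m: float, span_m: float) -> float:
    """Midspan moment of a simply supported beam under uniform load: w * L^2 / 8."""
    return w_kn_per_m * span_m ** 2 / 8.0


def results_agree(software_value: float, independent_value: float,
                  rel_tol: float = 0.05) -> bool:
    """Return True when the two results differ by no more than rel_tol.

    The 5% default is a hypothetical tolerance, not a published criterion.
    """
    return math.isclose(software_value, independent_value, rel_tol=rel_tol)


# Hypothetical inputs: 20 kN/m uniform load on a 30 m simple span.
hand_value = midspan_moment_hand_calc(20.0, 30.0)

# Hypothetical midspan moment reported by the analysis software, in kN*m.
software_value = 2262.0

print(f"hand calculation: {hand_value:.1f} kN*m")
print(f"software result:  {software_value:.1f} kN*m")
print("within tolerance:", results_agree(software_value, hand_value))
```

A check like this only confirms that two independent calculations agree. It does not confirm that either one represents the real behavior of the structure, which is the separate question that validation addresses.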

The responses indicate that 13 DOTs (25%) have written processes while eight DOTs (16%) have no processes for verifying their in-house analysis results. These results demonstrate that processes for verifying analysis results are more prevalent than processes for validating software, for which 21 DOTs (41%) have no processes.

Of the 13 DOTs that have written processes for verifying their in-house analysis results, the most common response for the often frequency, as selected by eight DOTs (62%), is the “checking of input variables.”
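The percentages quoted in this section can be reproduced from the response counts. The short sketch below assumes each share is the count divided by the relevant group of respondents (all 51 DOTs, or the 13 with written verification processes) and rounded to the nearest whole percent. The function and variable names are illustrative only.

```python
RESPONDENTS = 51  # DOTs that completed the survey


def share(count: int, total: int = RESPONDENTS) -> int:
    """Percentage of `total` represented by `count`, rounded to a whole percent."""
    return round(100 * count / total)


# Counts reported in the article for two quality processes.
written = {"software validation": 7, "in-house result verification": 13}
no_process = {"software validation": 21, "in-house result verification": 8}

for activity in written:
    print(f"{activity}: written {share(written[activity])}%, "
          f"no process {share(no_process[activity])}%")

# Share of the 13 DOTs with written verification processes that often check inputs.
print(f"checking of input variables: {share(8, total=13)}%")
```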

“Another team of engineers uses a different method or software” and “analysis engineer decides how to verify” are the other common responses for the “often” frequency.

Exploring Case Studies

Specific information was collected from five selected state DOTs – California, Colorado, Iowa, Louisiana and New York – to expand on their quality processes for bridge structural analysis models.

All five case example agencies have informal processes that rely on a manager, supervisor or unit leader to select appropriately qualified engineers based on their experience and availability. This decision also considers professional development needs, including training less experienced engineers or challenging more experienced engineers with unique or interesting projects. The district office associated with the bridge site commonly leads the projects.

All five case example agencies require a checker to independently verify the accuracy of the design engineer’s models, calculations and results. For complex bridges, California’s DOT (Caltrans) requires project-specific design criteria, a peer review panel and an independent check conducted by an engineer not associated with the group that completed the original analysis.

Caltrans and New York’s DOT (NYSDOT) require the use of different software in the independent check.

To overcome the challenge of finding appropriately qualified engineers in district offices, Caltrans established the seismic and special analysis branch, which focuses solely on structural modeling and analysis, while NYSDOT established the Main Office Structures group with 65 design staff who perform only structural analysis and final design.

Note that NYSDOT assigns only 15% of new bridge designs to consultants as compared to the national average of 59%, while Caltrans assigns only 15% of existing bridge analyses, including load ratings, to consultants as compared to the national average of 47%.

For training engineering staff, Caltrans established a six-week “bridge design academy” while NYSDOT has a 24-session “Bridge 101” training series. Iowa’s DOT indicated the benefits of designer-checker pairing and a dedicated training budget for the professional development of engineering staff.

The report concludes by identifying current knowledge gaps, along with suggestions for future research to address these gaps. The most obvious suggestion is to develop guidelines on effective quality processes for bridge structural analysis models, specifically for complex models such as finite element and strut-and-tie models.

The report also suggests developing a standardized system of advanced training for engineers in finite element and strut-and-tie analysis, as well as seminars or training courses for engineers on commonly misunderstood concepts like verification, validation, uncertainty, error and calibration.

Dr. Serhan Guner is an associate professor in the Department of Civil and Environmental Engineering at The University of Toledo.